Master the 4 Key Types of Machine Learning to Boost Your Career and Solve Complex Problems

Unlock the True Power of Machine Learning

Alright folks, let’s talk about machine learning.

If you’re looking to level up in data science and really make an impact, you’ve got to get a handle on these algorithms.

We’re swimming in data these days, and the tech to process it is getting more powerful all the time. Machine learning is right at the heart of this revolution, pushing forward all kinds of innovations across different fields. To get ahead, you need to understand the four big categories of machine learning algorithms—Supervised Learning, Unsupervised Learning, Semi-Supervised Learning, and Reinforcement Learning.

Mastering these will equip you to face a wide range of challenges and seriously boost your career.

Supervised Learning

Supervised learning is one of the most widely used and essential types of machine learning. It involves training a model on a labeled dataset, meaning that each training example is paired with an output label. The goal of supervised learning is to learn a mapping from inputs to outputs, allowing the model to predict the output for new, unseen data accurately.

Explanation

Supervised learning can be divided into two main types: regression and classification.

Regression: This is used when the output variable is a continuous value. Examples include predicting house prices, stock prices, or any other numerical value. Linear regression is a commonly used regression technique, where the model learns a linear relationship between the input variables and the output variable.

Classification: This is used when the output variable is a discrete label. Examples include classifying emails as spam or not spam, identifying the species of an animal based on its features, or determining whether a tumor is malignant or benign. Popular classification algorithms include logistic regression, decision trees, support vector machines (SVM), and neural networks.
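
A minimal scikit-learn sketch of both flavors, assuming synthetic data: the house-size/price relationship (3,000 per square meter plus 50,000) and the rule used to label the classification points are invented for illustration. The point is only that the same fit/predict workflow yields a number in one case and a class label in the other.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, LogisticRegression

rng = np.random.default_rng(0)

# Regression: continuous target. Hypothetical "house size -> price" data
# generated from a known linear relationship plus noise.
size_m2 = rng.uniform(50, 200, size=(100, 1))
price = 3000 * size_m2[:, 0] + 50_000 + rng.normal(0, 10_000, size=100)
reg = LinearRegression().fit(size_m2, price)
print("learned price per m2:", reg.coef_[0])  # close to the true 3000

# Classification: discrete target. Points are labeled 1 if x0 + x1 > 0, else 0.
X = rng.normal(size=(200, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(int)
clf = LogisticRegression().fit(X, y)
print("predicted classes:", clf.predict([[2.0, 2.0], [-2.0, -2.0]]))  # [1 0]
```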

Actionable Tip

To improve your supervised learning models, always perform a thorough exploratory data analysis (EDA) to understand the characteristics of your dataset. EDA involves visualizing the data, checking for missing values, identifying outliers, and understanding the relationships between variables. This will help you choose the right features and preprocessing steps, such as normalization or encoding categorical variables.
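
Here is what a first EDA pass might look like in pandas. The small listings table is hypothetical (prices in thousands), and the 1.5 * IQR outlier rule is one common convention rather than the only option.

```python
import numpy as np
import pandas as pd

# Hypothetical house listings with one missing value and one suspicious size.
df = pd.DataFrame({
    "size_m2": [70, 85, 120, 95, np.nan, 110, 900],
    "rooms": [2, 3, 4, 3, 3, 4, 5],
    "city": ["A", "B", "A", "C", "B", "A", "C"],
    "price": [210, 250, 360, 280, 265, 330, 400],
})

print(df.describe())          # summary statistics for numeric columns
print(df.isna().sum())        # missing values per column

# Flag outliers in one column with the 1.5 * IQR rule.
q1, q3 = df["size_m2"].quantile([0.25, 0.75])
iqr = q3 - q1
outliers = df[(df["size_m2"] < q1 - 1.5 * iqr) | (df["size_m2"] > q3 + 1.5 * iqr)]
print(outliers)

# Relationships between numeric variables.
print(df.select_dtypes("number").corr())

# Preprocessing the EDA might motivate: impute, one-hot encode, normalize.
df["size_m2"] = df["size_m2"].fillna(df["size_m2"].median())
df = pd.get_dummies(df, columns=["city"])
df["size_scaled"] = (df["size_m2"] - df["size_m2"].mean()) / df["size_m2"].std()
```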

Common Mistake

A common mistake in supervised learning is overfitting, where the model performs well on the training data but poorly on unseen test data. Overfitting occurs when the model learns not only the underlying patterns but also the noise in the training data. Cross-validation helps you detect overfitting and tune model complexity, while regularization and pruning directly constrain that complexity.

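Cross-Validation: In k-fold cross-validation, the dataset is split into k subsets (folds). The model is trained on k-1 folds and evaluated on the remaining fold, and this is repeated until every fold has served once as the validation set. Averaging the k scores gives a more reliable estimate of how well the model generalizes to unseen data than a single train/test split.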

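Regularization: Techniques like Lasso (L1) and Ridge (L2) regularization add a penalty on the size of the model's coefficients to the training loss (the sum of their absolute values for L1, the sum of their squares for L2), discouraging the model from fitting the noise in the data. L1 can also shrink some coefficients to exactly zero, which acts as a form of feature selection.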

Pruning: In decision trees, pruning involves removing branches that have little importance, which helps simplify the model and reduce overfitting.
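
The sketch below puts the three ideas side by side using two datasets that ship with scikit-learn: 5-fold cross-validation scores an unregularized linear model against Ridge and Lasso on the diabetes data, and a decision tree is compared with and without cost-complexity pruning (`ccp_alpha`, scikit-learn's pruning parameter) on the breast cancer data. The alpha values are arbitrary starting points; in practice you would tune them, for example with cross-validation.

```python
from sklearn.datasets import load_breast_cancer, load_diabetes
from sklearn.linear_model import Lasso, LinearRegression, Ridge
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

# Regularization: plain least squares vs. L2 (Ridge) and L1 (Lasso) penalties,
# each scored by 5-fold cross-validation (mean R^2 on the held-out folds).
X_reg, y_reg = load_diabetes(return_X_y=True)
cv_r2 = {}
for name, model in [("plain", LinearRegression()), ("ridge", Ridge(alpha=1.0)), ("lasso", Lasso(alpha=0.1))]:
    pipe = make_pipeline(StandardScaler(), model)
    cv_r2[name] = cross_val_score(pipe, X_reg, y_reg, cv=5).mean()
    print(f"{name:<6} mean CV R^2 = {cv_r2[name]:.3f}")

# Pruning: cost-complexity pruning removes subtrees whose impurity reduction
# does not justify their extra leaves; a larger ccp_alpha prunes more.
X_clf, y_clf = load_breast_cancer(return_X_y=True)
leaves = {}
for alpha in (0.0, 0.01):
    tree = DecisionTreeClassifier(ccp_alpha=alpha, random_state=0)
    accuracy = cross_val_score(tree, X_clf, y_clf, cv=5).mean()
    leaves[alpha] = tree.fit(X_clf, y_clf).get_n_leaves()
    print(f"ccp_alpha={alpha}: mean CV accuracy = {accuracy:.3f}, leaves = {leaves[alpha]}")
```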

Surprising Fact

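Did you know that decision trees, despite their simplicity, can be powerful models for both classification and regression tasks? When combined in ensembles such as Random Forests, they are often competitive with far more complex models, particularly on tabular data. A Random Forest trains many decision trees, each on a bootstrap sample of the data and considering only a random subset of features at each split, and then averages their predictions (or takes a vote, for classification). Averaging many decorrelated trees reduces variance, which usually improves accuracy over a single tree.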
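
A quick way to see the ensemble effect is to cross-validate a single tree and a forest on the same data. This sketch uses scikit-learn's bundled breast cancer dataset; exact accuracies depend on the data and settings, but the forest typically comes out ahead.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

single_tree = DecisionTreeClassifier(random_state=0)
# 200 trees, each fit on a bootstrap sample with a random feature subset at each split;
# scikit-learn's forest averages the trees' predicted class probabilities.
forest = RandomForestClassifier(n_estimators=200, random_state=0)

tree_acc = cross_val_score(single_tree, X, y, cv=5).mean()
forest_acc = cross_val_score(forest, X, y, cv=5).mean()
print(f"single tree:   {tree_acc:.3f}")
print(f"random forest: {forest_acc:.3f}")
```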

Example

Let's consider a simple example of classifying emails as spam or not spam. In this case, we use a decision tree to determine the classification based on features such as the presence of certain keywords, the sender's email address, and the length of the email.

Feature Selection: Identify relevant features that can help distinguish between spam and non-spam emails. For example, features could include the presence of words like "win" or "free," the sender's email domain, and the length of the email.

Training Data: Collect a labeled dataset of emails, where each email is marked as spam or not spam.

Model Training: Use the decision tree algorithm to learn the relationship between the features and the labels.

Prediction: Apply the trained model to new emails to predict whether they are spam or not.

Below is a diagram of a decision tree used for this task:

In the diagram, each node represents a decision based on a feature, and each branch represents the outcome of that decision. The leaf nodes represent the final classification (spam or not spam).
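
The four steps can be sketched with scikit-learn as follows. Everything about the data is hypothetical: the keyword list, the rule treating `.xyz` sender domains as suspicious, and the six training emails are made up to keep the example small, whereas a real filter would need thousands of labeled emails. `export_text` prints the learned tree as nested if/else rules, a text version of the kind of diagram described above.

```python
import re

from sklearn.tree import DecisionTreeClassifier, export_text

SPAM_WORDS = {"win", "free"}        # hypothetical keyword list
SUSPICIOUS_TLDS = (".xyz",)         # hypothetical "suspicious sender domain" rule


def extract_features(sender, body):
    """Step 1: turn one email into [spam-keyword count, suspicious-domain flag, length]."""
    words = set(re.findall(r"[a-z]+", body.lower()))
    domain = sender.rsplit("@", 1)[-1].lower()
    return [len(words & SPAM_WORDS), int(domain.endswith(SUSPICIOUS_TLDS)), len(body)]


# Step 2: tiny hand-labeled training set (1 = spam, 0 = not spam), made up for illustration.
training = [
    ("promo@deals.xyz", "WIN a FREE cruise now", 1),
    ("winner@prize.xyz", "You win! Claim your free gift", 1),
    ("offers@shop.com", "Free shipping, win big today", 1),
    ("alice@company.com", "Meeting moved to 3pm, see agenda attached", 0),
    ("bob@university.edu", "Draft of the report is ready for review", 0),
    ("carol@company.com", "Lunch tomorrow?", 0),
]
X = [extract_features(sender, body) for sender, body, _ in training]
y = [label for _, _, label in training]

# Step 3: learn the feature -> label relationship.
clf = DecisionTreeClassifier(max_depth=3, random_state=0).fit(X, y)
print(export_text(clf, feature_names=["spam_words", "suspicious_domain", "length"]))

# Step 4: classify new emails.
new_emails = [
    ("lucky@promo.xyz", "Free entry: win today"),
    ("dave@company.com", "Can you send the slides?"),
]
predictions = clf.predict([extract_features(sender, body) for sender, body in new_emails])
print(predictions)
```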

…

Supervised learning is a powerful tool for making predictions based on labeled data.

By understanding the principles of regression and classification, performing thorough exploratory data analysis, and using techniques to avoid overfitting, you can build accurate and robust supervised learning models.

Decision trees, Random Forests, and other algorithms provide versatile methods for tackling various prediction tasks, from classifying emails to predicting stock prices.

Unsupervised Learning

Unsupervised learning is a fascinating and powerful branch of machine learning that deals with data without labeled responses. Unlike supervised learning, where the model is trained on input-output pairs, unsupervised learning algorithms explore the structure of data to discover hidden patterns or intrinsic structures. This approach is particularly useful in scenarios where labeling data is impractical or impossible.

Unsupervised learning can be divided into two main tasks: clustering and dimensionality reduction.

Clustering

Clustering algorithms group similar data points together based on their features. This is useful for discovering natural groupings in data, such as customer segments, social networks, or biological data classification. Popular clustering algorithms include k-means, hierarchical clustering, and DBSCAN (Density-Based Spatial Clustering of Applications with Noise).
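
A minimal comparison of the three algorithms on synthetic data, assuming scikit-learn. The blob data, `eps`, and `min_samples` values are illustrative; DBSCAN's output in particular depends heavily on `eps`, and unlike the other two it does not need the number of clusters in advance.

```python
from sklearn.cluster import DBSCAN, AgglomerativeClustering, KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import adjusted_rand_score

# Synthetic 2-D data with four groups; y_true is used only to check results afterwards.
X, y_true = make_blobs(n_samples=300, centers=4, cluster_std=0.6, random_state=0)

kmeans_labels = KMeans(n_clusters=4, n_init=10, random_state=0).fit_predict(X)
hier_labels = AgglomerativeClustering(n_clusters=4, linkage="ward").fit_predict(X)
# DBSCAN takes no cluster count: eps and min_samples define what counts as a dense
# region, and points outside every dense region are labeled -1 (noise).
dbscan_labels = DBSCAN(eps=0.5, min_samples=5).fit_predict(X)

for name, labels in [("k-means", kmeans_labels), ("hierarchical", hier_labels), ("DBSCAN", dbscan_labels)]:
    n_found = len(set(labels) - {-1})
    agreement = adjusted_rand_score(y_true, labels)   # 1.0 = identical grouping
    print(f"{name:<12} clusters: {n_found}, adjusted Rand index vs. truth: {agreement:.2f}")
```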


Dimensionality Reduction

Dimensionality reduction techniques aim to reduce the number of features in a dataset while retaining as much information as possible. This is crucial for visualizing high-dimensional data and simplifying models, making them more efficient and interpretable. Two widely used dimensionality reduction techniques are Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE).

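PCA transforms the data into a new coordinate system whose axes (principal components) are linear combinations of the original features. The first component points in the direction of greatest variance, and each subsequent component captures the greatest remaining variance while staying orthogonal to the previous ones. Keeping only the first few components reduces dimensionality while retaining most of the variance in the data. t-SNE, on the other hand, is a nonlinear method designed mainly for visualization: it maps complex, high-dimensional data into a 2D or 3D plot while trying to preserve local neighborhoods, so points that are similar in the original space end up close together.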
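
The sketch below applies both techniques to scikit-learn's bundled handwritten-digits dataset (64 pixel features per image), a common choice for this kind of demo. Passing a fraction such as 0.90 as `n_components` tells scikit-learn's PCA to keep just enough components to explain that share of the variance; running t-SNE on the PCA output instead of the raw pixels is a common speed-up, not a requirement.

```python
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA
from sklearn.manifold import TSNE
from sklearn.preprocessing import StandardScaler

X, y = load_digits(return_X_y=True)             # 1,797 images x 64 pixel features
X_scaled = StandardScaler().fit_transform(X)

# PCA: keep the smallest number of components that explains 90% of the variance.
pca = PCA(n_components=0.90)
X_pca = pca.fit_transform(X_scaled)
print("components kept:", X_pca.shape[1])
print("variance explained by the first three:", pca.explained_variance_ratio_[:3])

# t-SNE: a 2-D embedding for plotting (e.g. a scatter plot colored by y).
X_2d = TSNE(n_components=2, perplexity=30, random_state=0).fit_transform(X_pca)
print("embedding shape:", X_2d.shape)
```

Keep in mind that t-SNE preserves local neighborhoods rather than global geometry: cluster sizes and the distances between clusters in the 2-D plot are not reliable measures of anything in the original space.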

Actionable Tip

To get the most out of unsupervised learning, always start with a clear understanding of your data. Perform exploratory data analysis (EDA) to identify patterns, anomalies, and relationships within the dataset. Visualize your data using tools like PCA and t-SNE before applying clustering algorithms. This will give you a better sense of the data’s structure and guide your choice of algorithm and parameters.

Common Mistake

One common pitfall in unsupervised learning is assuming that the algorithm will automatically produce meaningful clusters or dimensions without proper tuning. Clustering algorithms, in particular, require careful selection of parameters, such as the number of clusters (k in k-means). Use heuristics like the elbow method or the silhouette score to guide your choice of k, so that you neither split natural groups apart (too many clusters) nor merge distinct groups together (too few).
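
Here is one way to compute both diagnostics over a range of k with scikit-learn, on synthetic blob data chosen for illustration. Plotting `inertias` against k and looking for the bend gives the elbow method; the k with the highest average silhouette is a reasonable candidate, but treat both as guidance rather than proof.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.metrics import silhouette_score

X, _ = make_blobs(n_samples=300, centers=4, cluster_std=0.6, random_state=0)

inertias, silhouettes = {}, {}
for k in range(2, 9):
    km = KMeans(n_clusters=k, n_init=10, random_state=0).fit(X)
    inertias[k] = km.inertia_                          # within-cluster sum of squares (elbow)
    silhouettes[k] = silhouette_score(X, km.labels_)   # in [-1, 1], higher is better

for k in inertias:
    print(f"k={k}: inertia={inertias[k]:8.1f}  silhouette={silhouettes[k]:.3f}")
print("k with best silhouette:", max(silhouettes, key=silhouettes.get))
```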

Surprising Fact

Unsupervised learning algorithms can often reveal insights that are not apparent in supervised learning tasks. For example, clustering algorithms can identify customer segments that were previously unknown, leading to more targeted marketing strategies and improved customer satisfaction.

Example

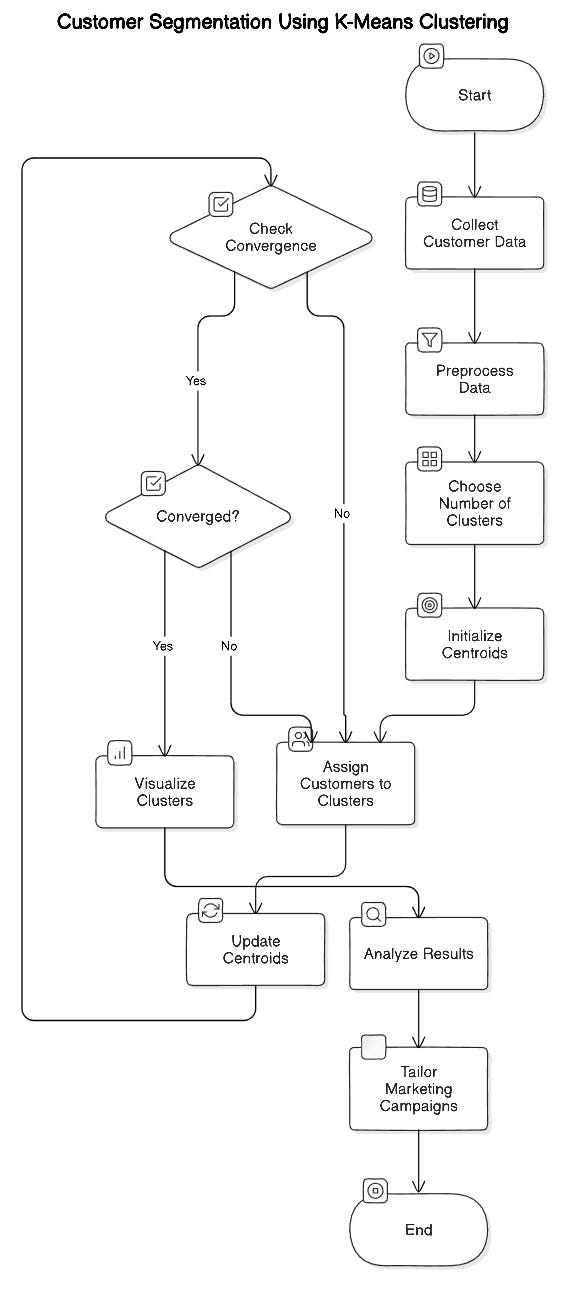

Consider a retail company looking to segment its customers based on purchasing behavior. Using k-means clustering, the company can group customers with similar buying patterns, helping them tailor marketing campaigns and product recommendations. Below is a diagram illustrating the result of k-means clustering applied to customer data:

In the diagram, each point represents a customer, and each cluster is represented by a different color. The centroids of the clusters indicate the average purchasing behavior of customers within each group.
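
A sketch of the retail scenario, assuming two hypothetical features per customer (annual spend and store visits per month) and synthetic data generated from three made-up customer types. Standardizing before k-means matters here because k-means uses Euclidean distance, so a feature measured in thousands of dollars would otherwise swamp one measured in single-digit visits.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(42)
# Hypothetical customers: columns are [annual spend in $, store visits per month].
occasional = np.column_stack([rng.normal(300, 50, 50), rng.normal(1, 0.3, 50)])
regular = np.column_stack([rng.normal(1500, 200, 50), rng.normal(4, 0.8, 50)])
big_spenders = np.column_stack([rng.normal(5000, 500, 50), rng.normal(2, 0.5, 50)])
customers = np.vstack([occasional, regular, big_spenders])

# Put both features on a comparable scale before clustering.
scaler = StandardScaler()
X = scaler.fit_transform(customers)
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X)

# Centroids converted back to original units describe each segment's "average customer".
centroids = scaler.inverse_transform(kmeans.cluster_centers_)
for i, (spend, visits) in enumerate(centroids):
    size = int(np.sum(kmeans.labels_ == i))
    print(f"segment {i}: {size} customers, avg spend ${spend:,.0f}, {visits:.1f} visits/month")
```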

…

Unsupervised learning is a powerful tool for discovering hidden patterns and structures in data.

By mastering clustering and dimensionality reduction techniques, you can uncover valuable insights that drive better decision-making and innovation. Whether you're segmenting customers, simplifying complex datasets, or exploring new data, unsupervised learning algorithms provide a flexible and robust approach to understanding the underlying structure of your data.

Semi-Supervised Learning

Semi-supervised learning strikes a balance between supervised and unsupervised learning by utilizing both labeled and unlabeled data. This approach is particularly valuable when labeled data is scarce or expensive to obtain, but there is an abundance of unlabeled data. By leveraging the vast amount of unlabeled data, semi-supervised learning can significantly improve model performance and make the most out of limited labeled data.

Explanation

Semi-supervised learning combines a small amount of labeled data with a large amount of unlabeled data during training. The primary goal is to use the labeled data to guide the learning process while allowing the model to learn additional patterns from the unlabeled data. This approach can be especially effective in scenarios where obtaining labeled data is time-consuming or costly, such as in natural language processing (NLP) or medical image analysis.

Techniques

Several techniques can be employed in semi-supervised learning, including self-training, co-training, and graph-based methods.

Self-Training: In self-training, the model is initially trained on the labeled data. The trained model is then used to predict labels for the unlabeled data. The most confident predictions are added to the labeled dataset, and the process is repeated. This iterative process allows the model to gradually improve by leveraging the additional data.

Co-Training: Co-training involves training two or more models on different views of the data, for example disjoint subsets of features (such as the text of a web page and the text of the links pointing to it). Each model is initially trained on the labeled data. Then each model's most confident predictions on the unlabeled data are used as new labeled training examples for the other model. This technique works best when each view is informative enough on its own to predict the label.

Graph-Based Methods: Graph-based methods represent the data as a graph, where nodes represent data points and edges represent similarities between them. These methods propagate label information from labeled to unlabeled data based on the structure of the graph. One popular graph-based method is label propagation.
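
Label propagation is available in scikit-learn as `LabelPropagation`, which expects unlabeled samples to carry the label -1. In this sketch on the synthetic two-moons dataset, only 10 of 300 points keep their labels; the neighbor count and the number of labeled points are illustrative choices.

```python
import numpy as np
from sklearn.datasets import make_moons
from sklearn.semi_supervised import LabelPropagation

X, y_true = make_moons(n_samples=300, noise=0.08, random_state=0)

# Keep the labels of only 5 points per class; mark every other point as unlabeled (-1).
rng = np.random.default_rng(0)
labeled_idx = np.concatenate(
    [rng.choice(np.flatnonzero(y_true == c), size=5, replace=False) for c in (0, 1)]
)
y_partial = np.full_like(y_true, -1)
y_partial[labeled_idx] = y_true[labeled_idx]

# Build a k-nearest-neighbor graph over all points and spread the 10 known labels along it.
model = LabelPropagation(kernel="knn", n_neighbors=10, max_iter=2000)
model.fit(X, y_partial)

unlabeled = y_partial == -1
accuracy = np.mean(model.transduction_[unlabeled] == y_true[unlabeled])
print(f"accuracy on the {unlabeled.sum()} unlabeled points: {accuracy:.2f}")
```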

Actionable Tip

When dealing with semi-supervised learning, start by ensuring that the labeled data you have is of high quality. Clean, accurate labels are crucial for guiding the learning process. Then, use techniques like self-training or co-training to iteratively label the unlabeled data and improve the model's performance. Validate the model's predictions on a separate test set to ensure that it generalizes well.

Common Mistake

A common mistake in semi-supervised learning is assuming that the unlabeled data is distributed similarly to the labeled data. If the unlabeled data contains different patterns or distributions, this assumption can lead to biased models. Check it before training, for example by comparing feature statistics between the two pools or by testing whether a simple classifier can tell labeled and unlabeled examples apart, and adjust your approach accordingly. Another pitfall is over-relying on the model's own predictions to label the unlabeled data: early mistakes get added to the training set and can propagate, so use conservative confidence thresholds and monitor performance on a held-out labeled set.
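
One practical way to test the distribution assumption, sometimes called adversarial validation, is to train a classifier to predict whether a sample came from the labeled or the unlabeled pool. A cross-validated ROC AUC near 0.5 means the pools look alike to the model; a value near 1.0 signals a shift. The data below is synthetic, with a deliberate shift in one feature for contrast, and logistic regression is just one simple choice of detector.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score


def pool_separability_auc(X_labeled, X_unlabeled):
    """Cross-validated ROC AUC of a classifier that tries to tell the two pools apart.

    About 0.5: the pools look alike. Close to 1.0: the unlabeled data is distributed differently.
    """
    X = np.vstack([X_labeled, X_unlabeled])
    came_from_unlabeled = np.r_[np.zeros(len(X_labeled), dtype=int), np.ones(len(X_unlabeled), dtype=int)]
    detector = LogisticRegression(max_iter=1000)
    return cross_val_score(detector, X, came_from_unlabeled, cv=5, scoring="roc_auc").mean()


rng = np.random.default_rng(0)
X_labeled = rng.normal(size=(500, 5))
X_unlabeled_same = rng.normal(size=(500, 5))      # same distribution as the labeled pool
X_unlabeled_shifted = rng.normal(size=(500, 5))
X_unlabeled_shifted[:, 0] += 3.0                  # deliberate shift in one feature

same_auc = pool_separability_auc(X_labeled, X_unlabeled_same)
shifted_auc = pool_separability_auc(X_labeled, X_unlabeled_shifted)
print(f"same distribution:    AUC = {same_auc:.2f}")
print(f"shifted distribution: AUC = {shifted_auc:.2f}")
```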

Surprising Fact

Did you know that semi-supervised learning can significantly reduce the need for labeled data, sometimes achieving similar performance to fully supervised models with only a fraction of the labeled data? For example, in a study on text classification, semi-supervised learning models achieved accuracy levels close to those of fully supervised models while using only 10% of the labeled data. This demonstrates the potential of semi-supervised learning to maximize the value of limited labeled data.

Example

An example of semi-supervised learning is text classification in Natural Language Processing (NLP) when only a small fraction of the documents is labeled. Let's consider a scenario where we want to classify movie reviews as positive or negative. We start with a small set of labeled reviews and a large set of unlabeled reviews.

Initial Training: Train a classifier on the labeled reviews.

Label Unlabeled Data: Use the trained classifier to predict labels for the unlabeled reviews.

Add Confident Predictions: Add the most confident predictions to the labeled dataset.

Iterate: Repeat the process, gradually expanding the labeled dataset with high-confidence predictions.

Below is a diagram illustrating this process:

In the diagram, the initial labeled data is used to train the model, which then predicts labels for the unlabeled data. The most confident predictions are added to the labeled data, and the process repeats.
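
The loop can be sketched in a few lines. This is a minimal, hand-rolled version (scikit-learn also ships a packaged `SelfTrainingClassifier`) using TF-IDF features and logistic regression. The reviews are made up, and pseudo-labeling a fixed number of the most confident reviews per round (`per_round`, a hypothetical parameter) is one simple selection rule among several, such as a probability threshold.

```python
import numpy as np
from scipy.sparse import vstack
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression

# Made-up reviews: a small labeled set (1 = positive, 0 = negative) and an unlabeled pool.
labeled_texts = [
    "a wonderful film with great acting", "loved the story and the brilliant cast",
    "great fun, I enjoyed every minute", "a boring film with terrible acting",
    "hated the story, awful and dull", "terrible pacing, I was bored every minute",
]
labeled_y = np.array([1, 1, 1, 0, 0, 0])
unlabeled_texts = [
    "brilliant and wonderful, great cast", "awful film, boring and terrible",
    "I enjoyed the great story", "dull acting and a terrible story",
    "wonderful fun with a brilliant story", "bored by the awful, dull pacing",
]


def self_train(X_lab, y_lab, X_unl, per_round=2, max_rounds=10):
    """Minimal self-training: repeatedly pseudo-label the most confident unlabeled rows."""
    X_cur, y_cur = X_lab, np.asarray(y_lab)
    remaining = np.arange(X_unl.shape[0])                       # rows still unlabeled
    pseudo_labels = np.full(X_unl.shape[0], -1)                 # -1 = not labeled yet
    clf = LogisticRegression()
    for _ in range(max_rounds):
        if remaining.size == 0:
            break
        clf.fit(X_cur, y_cur)                                   # 1. train on current labeled set
        proba = clf.predict_proba(X_unl[remaining])             # 2. predict the unlabeled rows
        top = np.argsort(proba.max(axis=1))[::-1][:per_round]   # 3. most confident predictions
        chosen = remaining[top]
        pseudo_labels[chosen] = clf.classes_[proba[top].argmax(axis=1)]
        X_cur = vstack([X_cur, X_unl[chosen]], format="csr")    #    add them to the training set
        y_cur = np.concatenate([y_cur, pseudo_labels[chosen]])
        remaining = np.delete(remaining, top)                   # 4. iterate on the rest
    clf.fit(X_cur, y_cur)                      # final model on labeled + pseudo-labeled data
    return clf, pseudo_labels


vectorizer = TfidfVectorizer().fit(labeled_texts + unlabeled_texts)  # vocabulary only, no labels
X_lab = vectorizer.transform(labeled_texts)
X_unl = vectorizer.transform(unlabeled_texts)

clf, pseudo_labels = self_train(X_lab, labeled_y, X_unl)
for text, label in zip(unlabeled_texts, pseudo_labels):
    print(label, text)
```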

…

Semi-supervised learning is a powerful approach that leverages both labeled and unlabeled data to improve model performance.

By combining high-quality labeled data with large amounts of unlabeled data, you can achieve impressive results even when labeled data is scarce. Techniques like self-training, co-training, and graph-based methods provide flexible strategies for incorporating unlabeled data into the learning process.

Whether you're working on text classification, image analysis, or any other data-intensive task, semi-supervised learning offers a valuable tool for making the most out of your data.

Reinforcement Learning

Reinforcement learning (RL) stands out as one of the most dynamic and powerful techniques in the machine learning toolbox. Unlike supervised and unsupervised learning, reinforcement learning is all about training models to make a sequence of decisions by rewarding them for correct actions and penalizing them for mistakes. This approach is particularly effective in scenarios where the model must learn to achieve a goal in a complex, uncertain environment, such as robotics, gaming, and automated trading.

Explanation

Reinforcement learning involves an agent that interacts with an environment by taking actions and receiving feedback in the form of rewards or penalties. The agent's objective is to maximize the cumulative reward over time. The fundamental components of an RL system include:

Agent: The learner or decision maker.

Environment: Everything the agent interacts with.

State: A representation of the current situation of the agent.

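Action: A move the agent can make; the set of all possible actions is called the action space.

Reward: A scalar feedback signal from the environment that tells the agent how good the outcome of its action was.

Policy: The strategy that the agent employs to determine actions based on the current state.

Value Function: A function that estimates the expected cumulative (usually discounted) reward the agent will collect starting from a given state and following its policy.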
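
To tie these components together, here is a minimal, hypothetical sketch: a five-cell corridor environment (the `Corridor` class and its reward values are made up for illustration), two hand-written policies, and a loop in which the agent observes a state, picks an action, and receives a reward. Averaging the discounted returns of many episodes gives a simple Monte Carlo estimate of the value of the start state under a policy.

```python
import random


class Corridor:
    """Hypothetical toy environment: cells 0..4, the agent starts at 0, the goal is cell 4."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position                        # the state is just the agent's cell

    def step(self, action):
        # action is -1 (move left) or +1 (move right); the walls clip the position
        self.position = min(max(self.position + action, 0), self.length - 1)
        done = self.position == self.length - 1
        reward = 1.0 if done else -0.1              # bonus at the goal, small cost per step
        return self.position, reward, done


def always_right(state):
    return +1


def random_policy(state):
    return random.choice([-1, +1])


def run_episode(env, policy, gamma=0.9, max_steps=100):
    """Run one episode and return the discounted return: sum over t of gamma**t * r_t."""
    state = env.reset()
    discounted_return = 0.0
    for t in range(max_steps):
        action = policy(state)                      # the policy maps a state to an action
        state, reward, done = env.step(action)      # the environment responds
        discounted_return += gamma**t * reward
        if done:
            break
    return discounted_return


random.seed(0)
env = Corridor()
print("always right:", run_episode(env, always_right))   # -0.1 - 0.09 - 0.081 + 0.729
mean_random = sum(run_episode(env, random_policy) for _ in range(1000)) / 1000
print("random policy, estimated value of the start state:", mean_random)
```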

Techniques

Several techniques and algorithms are used in reinforcement learning, including Q-learning, Deep Q-Networks (DQN), and Policy Gradient methods.

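Q-Learning: Q-learning is a value-based method where the agent learns a Q-value for each state-action pair. The Q-value Q(s, a) represents the expected cumulative discounted reward of taking action a in state s and acting optimally afterwards. After each step, the agent nudges Q(s, a) toward the observed reward plus the discounted best Q-value of the next state; acting greedily with respect to the learned Q-values then gives a policy that maximizes the cumulative reward.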
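
A tabular Q-learning sketch on a toy corridor (cells 0 to 4, goal at cell 4; the environment, rewards, and hyperparameters are illustrative). The key line is the update `q[state, a] += alpha * (target - q[state, a])`, which moves the estimate toward the reward plus the discounted best Q-value of the next state.

```python
import numpy as np

N_STATES, GOAL = 5, 4
ACTIONS = (-1, +1)                      # index 0 = move left, index 1 = move right


def step(state, action_idx):
    """Hypothetical deterministic corridor: +1 on reaching the goal, -0.1 per other step."""
    next_state = min(max(state + ACTIONS[action_idx], 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else -0.1), done


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = np.random.default_rng(seed)
    q = np.zeros((N_STATES, len(ACTIONS)))
    for _episode in range(episodes):
        state = 0
        for _t in range(100):
            if rng.random() < epsilon:                          # explore
                a = int(rng.integers(len(ACTIONS)))
            else:                                               # exploit, breaking ties randomly
                best = np.flatnonzero(q[state] == q[state].max())
                a = int(rng.choice(best))
            next_state, reward, done = step(state, a)
            target = reward if done else reward + gamma * q[next_state].max()
            q[state, a] += alpha * (target - q[state, a])       # Q-learning update
            state = next_state
            if done:
                break
    return q


q = q_learning()
greedy = q[:GOAL].argmax(axis=1)
print(np.round(q, 3))
print("greedy action per non-goal cell (1 = right):", greedy)
```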

Deep Q-Networks (DQN): DQN combines Q-learning with deep neural networks. The neural network approximates the Q-value function, allowing the agent to handle high-dimensional state spaces. This approach has been successfully applied to complex problems like playing Atari games.

Policy Gradient Methods: Instead of learning the value of actions, policy gradient methods directly learn the policy, which maps states to actions. This approach is particularly useful in environments with large or continuous action spaces. Algorithms like REINFORCE and Proximal Policy Optimization (PPO) are popular in this category.
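
To show the core policy-gradient idea without a deep-learning framework, here is a REINFORCE sketch on the simplest possible problem: a two-armed bandit, where each episode is a single action. The policy is a softmax over two parameters, and each update follows the gradient of the log-probability of the chosen action, scaled by how much better than average the reward was (a running-average baseline, a standard variance-reduction trick). The arms' reward probabilities and the learning rates are made up.

```python
import numpy as np


def softmax(logits):
    z = np.exp(logits - logits.max())          # subtract the max for numerical stability
    return z / z.sum()


def reinforce_bandit(reward_probs=(0.2, 0.8), steps=2000, lr=0.1, seed=0):
    """REINFORCE on a Bernoulli bandit: each episode is one action and one reward."""
    rng = np.random.default_rng(seed)
    theta = np.zeros(len(reward_probs))        # policy parameters: one logit per action
    baseline = 0.0                             # running average of the reward
    for _ in range(steps):
        pi = softmax(theta)
        action = rng.choice(len(pi), p=pi)     # sample an action from the current policy
        reward = float(rng.random() < reward_probs[action])
        grad_log_pi = -pi                      # gradient of log softmax: one_hot(action) - pi
        grad_log_pi[action] += 1.0
        theta += lr * (reward - baseline) * grad_log_pi
        baseline += 0.01 * (reward - baseline)
    return softmax(theta)


pi = reinforce_bandit()
print("learned action probabilities:", np.round(pi, 3))
```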

Actionable Tip

Start with simpler environments and algorithms like Q-learning before moving on to more complex algorithms and environments in reinforcement learning. Use simulation environments like OpenAI Gym (now maintained as Gymnasium by the Farama Foundation) to experiment and build a deeper understanding of RL concepts. These platforms provide a wide range of environments, from classic control tasks to advanced robotics simulations.

Common Mistake

A common mistake in reinforcement learning is not tuning the hyperparameters properly, which can lead to suboptimal policies. Hyperparameters such as learning rate, discount factor, and exploration rate significantly impact the performance of RL algorithms. Invest time in experimenting with different values to find the best combination. Additionally, ensure adequate exploration during training to prevent the agent from getting stuck in local optima.
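
Two building blocks for managing exploration, as a minimal sketch: epsilon-greedy action selection, and a linear schedule that starts fully exploratory and decays epsilon to a small floor. The start, end, and decay-length values are illustrative defaults, and they are exactly the kind of hyperparameters worth sweeping alongside the learning rate and discount factor.

```python
import numpy as np


def linear_epsilon(step, start=1.0, end=0.05, decay_steps=10_000):
    """Exploration rate that falls linearly from `start` to `end`, then stays flat."""
    fraction = min(step / decay_steps, 1.0)
    return start + fraction * (end - start)


def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon pick a random action (explore), else the best-known one (exploit)."""
    if rng.random() < epsilon:
        return int(rng.integers(len(q_values)))
    return int(np.argmax(q_values))


rng = np.random.default_rng(0)
q_values = np.array([0.1, 0.9, 0.3])       # hypothetical Q-values for three actions
for step in (0, 5_000, 20_000):
    eps = linear_epsilon(step)
    print(f"step {step:>6}: epsilon = {eps:.3f}, chosen action = {epsilon_greedy(q_values, eps, rng)}")
```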

Surprising Fact

Reinforcement learning has been used to train agents that outperform top human players in games like Go and Dota 2, demonstrating its potential to solve highly complex problems. For instance, AlphaGo, developed by DeepMind, combined supervised learning on human expert games, reinforcement learning through self-play, and Monte Carlo tree search to defeat Lee Sedol, one of the world's strongest Go players, in 2016. Go is known for its immense complexity and strategic depth, which made this a milestone.

Example with Diagram

Consider training a robotic arm to pick up objects. The RL process involves the following steps:

Initial Setup: Define the environment, including the robot and the objects.

State Representation: Represent the state as the position and orientation of the robotic arm and the object.

Action Space: Define the possible actions, such as moving the arm in different directions.

Reward Function: Design a reward function that provides positive feedback for successful object pickups and negative feedback for failed attempts.

Below is a diagram illustrating the RL process for training a robotic arm:

In the diagram, the agent (robotic arm) interacts with the environment by taking actions (moving the arm), observing the state (position and orientation), and receiving rewards (successful pickup or failure). Over time, the agent learns the optimal policy to maximize the cumulative reward.
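
The reward function is often the most delicate part of such a setup. Below is a hypothetical sketch: the `ArmState` fields, the 10 cm lift target, and the reward magnitudes are assumptions for illustration, not values from a real robot. The small distance-based term (reward shaping) gives the agent a signal before its first successful pickup; shaping terms need care, because agents readily exploit rewards that do not match the true goal.

```python
import math
from dataclasses import dataclass


@dataclass
class ArmState:
    """Hypothetical state: gripper and object positions (meters) plus grasp status."""
    gripper_xyz: tuple[float, float, float]
    object_xyz: tuple[float, float, float]
    object_grasped: bool
    object_height: float                      # height of the object above the table


def pickup_reward(state: ArmState, dropped: bool, lift_target: float = 0.10) -> tuple[float, bool]:
    """Return (reward, episode_done) for one step of a hypothetical pickup task."""
    if dropped:
        return -1.0, True                     # failed attempt: penalty, episode ends
    if state.object_grasped and state.object_height >= lift_target:
        return 1.0, True                      # successful pickup
    distance = math.dist(state.gripper_xyz, state.object_xyz)
    return -0.01 - 0.1 * distance, False      # small step cost; closer to the object is better


near = ArmState((0.05, 0.0, 0.02), (0.0, 0.0, 0.0), False, 0.0)
far = ArmState((0.5, 0.0, 0.3), (0.0, 0.0, 0.0), False, 0.0)
print(pickup_reward(near, dropped=False), pickup_reward(far, dropped=False))
```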

…

Reinforcement learning is a powerful tool for training agents to make a sequence of decisions in complex, uncertain environments.

By understanding the fundamental components and techniques of RL, you can build models that learn from their interactions with the environment and improve over time.

Whether you're developing autonomous robots, creating advanced game-playing agents, or optimizing trading strategies, reinforcement learning offers a flexible and robust approach to solving challenging problems.

Wrapping Up

Mastering the four key categories of machine learning (Supervised Learning, Unsupervised Learning, Semi-Supervised Learning, and Reinforcement Learning) will significantly enhance your ability to solve complex problems and advance your career.

By understanding the principles, avoiding common mistakes, and applying the tips provided, you can harness the power of machine learning to achieve remarkable results. Start exploring these algorithms today and unlock new opportunities in the exciting field of data science.

Dive deeper into each category of machine learning algorithms, experiment with real-world datasets, and continuously refine your skills. Join online communities, take advanced courses, and stay updated with the latest research to stay ahead in your machine learning journey.